Software-defined video production – Utilizing Public 5G Networks for Multi-Access Edge Computing (MEC) Architectures

The sports industry is always looking for ways to reduce video delays and improve the experience for viewers, fans, and players. 5G networks offer a solution by delivering the high bandwidth, low latency, and reliability that the following core video production functions depend on:

Mixing: Combining various audio and video sources to produce a cohesive program output. Traditionally, this required large, complex hardware mixers. Today, virtualized software-based mixers can seamlessly blend live feeds, replays, commentary audio, ambient stadium sound, overlay graphics, and more.
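At its core, the audio side of a software mixer scales each source by a fader level and sums the results. The following is a minimal sketch, assuming mono floating-point sample buffers that are already time-aligned; the function and source names are hypothetical, and a production mixer would add resampling, panning, and a proper limiter instead of hard clipping.

```python
from typing import Dict, List


def mix(sources: Dict[str, List[float]], gains: Dict[str, float]) -> List[float]:
    """Blend time-aligned mono sample buffers into one program output.

    Each source is scaled by its gain (a fader level), the scaled samples are
    summed, and the result is hard-clipped to the float audio range [-1.0, 1.0].
    """
    if not sources:
        return []
    lengths = {len(buf) for buf in sources.values()}
    if len(lengths) != 1:
        raise ValueError("all source buffers must have the same length")
    (n,) = lengths
    out = [0.0] * n
    for name, buf in sources.items():
        gain = gains.get(name, 1.0)  # sources without a fader setting play at unity gain
        for i, sample in enumerate(buf):
            out[i] += gain * sample
    return [max(-1.0, min(1.0, s)) for s in out]


# Hypothetical sources: commentary at full level, crowd ambience at half level.
program = mix(
    {"commentary": [0.5, 0.5, -0.2], "crowd": [0.4, 0.9, -0.9]},
    {"commentary": 1.0, "crowd": 0.5},
)
```

Keeping the gains in a separate dictionary mirrors a physical console, where the sources are fixed inputs and the operator only moves the faders.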

Switching: Selecting between multiple video feeds. During a live sports event, multiple cameras capture the action from different angles. A video switcher decides, in real time, which camera feed is broadcast, determining what the viewer sees at any given moment, whether that's a close-up of a player, an overview of the field, or a replay.
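Many production switchers expose this as two buses: a program bus holding the feed on air and a preview bus holding the feed cued up for the next cut. The following sketch models that behavior with a hypothetical `VideoSwitcher` class; the feed names are placeholders, and real switchers also support transitions such as dissolves and wipes.

```python
from typing import List, Optional


class VideoSwitcher:
    """Minimal program/preview switcher.

    The program bus holds the feed currently on air; the preview bus holds the
    feed cued up for the next cut. A "take" swaps the two.
    """

    def __init__(self, feeds: List[str]) -> None:
        if not feeds:
            raise ValueError("at least one feed is required")
        self.feeds = list(feeds)
        self.program: str = self.feeds[0]
        self.preview: Optional[str] = None

    def cue(self, feed: str) -> None:
        """Place a feed on the preview bus without changing what is on air."""
        if feed not in self.feeds:
            raise KeyError(f"unknown feed: {feed}")
        self.preview = feed

    def take(self) -> str:
        """Cut the preview feed to air; the old program feed moves to preview."""
        if self.preview is not None:
            self.program, self.preview = self.preview, self.program
        return self.program


switcher = VideoSwitcher(["wide", "player_closeup", "replay"])
switcher.cue("player_closeup")
on_air = switcher.take()
```

Swapping the buses on each take lets the director cut back to the previous angle with a single action, a common pattern during fast play.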

Routing: Directing video and audio signals from their source to their intended destination. This might involve sending a camera feed to a monitor in the control room or routing an audio signal to a commentator’s headset. In large-scale sports events, routing becomes complex due to the sheer number of sources and destinations involved.
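A router is often thought of as a crosspoint matrix: every destination listens to exactly one source, while a single source can feed many destinations. The following is a minimal sketch of that model; the `RoutingMatrix` class and the source and destination names are hypothetical.

```python
from typing import Dict, Iterable, Set


class RoutingMatrix:
    """Crosspoint router: each destination takes exactly one source,
    while one source may feed any number of destinations."""

    def __init__(self, sources: Iterable[str], destinations: Iterable[str]) -> None:
        self.sources: Set[str] = set(sources)
        self.destinations: Set[str] = set(destinations)
        self.crosspoints: Dict[str, str] = {}  # destination -> source

    def route(self, source: str, destination: str) -> None:
        """Connect a source to a destination, replacing any previous route."""
        if source not in self.sources:
            raise KeyError(f"unknown source: {source}")
        if destination not in self.destinations:
            raise KeyError(f"unknown destination: {destination}")
        self.crosspoints[destination] = source

    def destinations_of(self, source: str) -> Set[str]:
        """List every destination currently fed by a source."""
        return {dst for dst, src in self.crosspoints.items() if src == source}


router = RoutingMatrix(
    sources=["cam1", "cam2", "director_talkback"],
    destinations=["control_room_monitor_1", "control_room_monitor_2", "commentator_headset"],
)
router.route("cam1", "control_room_monitor_1")
router.route("cam1", "control_room_monitor_2")
router.route("director_talkback", "commentator_headset")
```

Keying the table by destination enforces the one-source-per-destination rule for free, which is why the complexity at large events grows with the number of destinations rather than with the number of possible pairings.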

Transcoding: Converting video and audio from one format to another, for example to a different codec, resolution, or bitrate. Because audiences watch sports content on diverse devices, from high-definition televisions to smartphones, broadcasters need to ensure their content is compatible with all of these platforms. For live sports events, this transcoding must happen in real time.
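One common approach is to produce a "ladder" of renditions at decreasing resolutions and bitrates so that each device can pick a suitable one. The following sketch builds the command lines for FFmpeg, a widely used open-source transcoder, but does not run them. The ladder values, file names, and the `build_transcode_commands` helper are hypothetical; a live pipeline would read from a network stream and write segmented output (for example, HLS) rather than MP4 files.

```python
from typing import List, Tuple

# Hypothetical rendition ladder: (output height in pixels, target video bitrate).
LADDER: List[Tuple[int, str]] = [(1080, "6000k"), (720, "3000k"), (480, "1200k"), (360, "800k")]


def build_transcode_commands(src: str, source_height: int) -> List[List[str]]:
    """Return one ffmpeg argument list per rendition, never upscaling past the source."""
    commands: List[List[str]] = []
    for height, bitrate in LADDER:
        if height > source_height:
            continue  # upscaling adds bits without adding detail
        commands.append([
            "ffmpeg", "-i", src,
            "-vf", f"scale=-2:{height}",  # -2: keep aspect ratio, round width to an even number
            "-c:v", "libx264", "-b:v", bitrate,
            "-c:a", "aac",
            f"out_{height}p.mp4",
        ])
    return commands


cmds = build_transcode_commands("input.mp4", source_height=720)
# Each list could be passed to subprocess.run(cmd, check=True) on a host with ffmpeg installed.
```

Skipping rungs above the source resolution keeps the ladder honest: a 720p camera feed yields 720p, 480p, and 360p renditions only.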

By virtualizing these functions as software running in the cloud, we gain the same advantages as any other cloud application. The main benefits here tend to be paying only for what you use and having access to the latest hardware and software without repeatedly making large capital purchases to upgrade specialized equipment.

Historically, the obstacle to moving these functions to the cloud has been their high sensitivity to latency. Consider something as simple as synchronizing audio and video feeds: it doesn't take much of a time offset for the result to look wrong to a viewer. This is why, even in the era of virtualized video production, crews show up to large sporting events with a portable production facility: a mini data center full of rackmount servers in a shipping container towed by an 18-wheeler. Content produced on-site is then sent through expensive satellite uplinks for distribution:

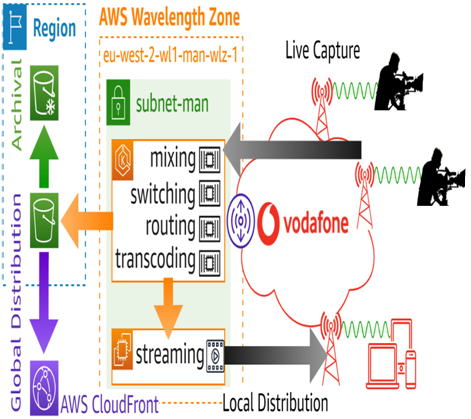

Figure 10.11 – Live video production at the edge with AWS Wavelength

Now, as illustrated in the preceding diagram, 5G-capable cameras and microphones send content straight to the AWS Wavelength Zone, so no on-site production facility is needed to meet the 1-2 ms latency requirement.
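Because cameras and microphones now reach the Wavelength Zone as independent 5G devices, the production functions there must still keep audio and video aligned, the same synchronization problem described earlier. The following is a minimal sketch, assuming each stream carries capture timestamps so that the arrival times of audio and video for the same captured instant can be compared. The function names are hypothetical, and the default thresholds are illustrative assumptions reflecting the general observation that viewers notice audio leading video sooner than audio lagging it.

```python
from typing import Tuple


def av_offset_ms(audio_arrival_ms: float, video_arrival_ms: float) -> float:
    """Offset between audio and video captured at the same instant.

    Positive: audio arrives before (leads) the video. Negative: audio lags.
    """
    return video_arrival_ms - audio_arrival_ms


def in_sync(offset_ms: float, max_lead_ms: float = 45.0, max_lag_ms: float = 125.0) -> bool:
    """Asymmetric tolerance check; the defaults are illustrative, not a specification."""
    return -max_lag_ms <= offset_ms <= max_lead_ms


def delay_to_apply(offset_ms: float) -> Tuple[str, float]:
    """Which stream to hold back, and by how much, to realign the two."""
    if offset_ms > 0:
        return ("audio", offset_ms)   # audio is early: delay it
    if offset_ms < 0:
        return ("video", -offset_ms)  # video is early: delay it
    return ("none", 0.0)


offset = av_offset_ms(audio_arrival_ms=1000.0, video_arrival_ms=1060.0)
needs_fix = not in_sync(offset)
fix = delay_to_apply(offset)
```

Realigning always means delaying the earlier stream, which is one reason the network latency to the zone has to stay small: every millisecond of skew becomes added end-to-end delay.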

In the Wavelength Zone, containers run the virtualized video production functions on a set of g4dn.2xlarge worker nodes, whose NVIDIA GPUs accelerate this processing. This is where localized elements, such as captions and unique graphics, are added, and where tasks such as switching and editing are carried out. Content is then either streamed directly to viewers or sent to the parent AWS Region for broader distribution through Amazon CloudFront.
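The flow inside the zone can be pictured as an ordered pipeline of production stages followed by a distribution decision. The following toy model is a sketch only: `Frame`, the stage factories, and the target labels are hypothetical, and the stages merely record overlays rather than doing the GPU-accelerated compositing the containers would perform. The distribution rule assumes that direct streaming applies to viewers on the carrier's 5G network, which is the network a Wavelength Zone is attached to.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Frame:
    """Stand-in for a decoded video frame; overlays records what has been composited."""
    camera: str
    overlays: List[str] = field(default_factory=list)


Stage = Callable[[Frame], Frame]


def add_caption(text: str) -> Stage:
    def stage(frame: Frame) -> Frame:
        frame.overlays.append(f"caption:{text}")
        return frame
    return stage


def add_graphic(name: str) -> Stage:
    def stage(frame: Frame) -> Frame:
        frame.overlays.append(f"graphic:{name}")
        return frame
    return stage


def run_pipeline(frame: Frame, stages: List[Stage]) -> Frame:
    """Apply each production stage in order."""
    for stage in stages:
        frame = stage(frame)
    return frame


def distribution_target(viewer_on_carrier_network: bool) -> str:
    """Carrier-network viewers are served from the zone; others via the Region and CloudFront."""
    return "wavelength-zone" if viewer_on_carrier_network else "region-cloudfront"


frame = run_pipeline(Frame(camera="cam2"), [add_caption("GOAL!"), add_graphic("scorebug")])
```

Treating each function as an independent stage matches the container-per-function layout: a stage can be scaled, replaced, or reordered without touching the others.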

This architecture also opens up personalized viewing options. Fans in the stadium could select their preferred camera angles on their phones and even see AR elements overlaid on the video. Fans attending in person could also have their own “fan view” included in the main broadcast.
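Personalized viewing boils down to tracking a per-fan feed choice with a sensible default, plus letting a fan's own camera become a new source. The following sketch illustrates that bookkeeping under stated assumptions: the `PersonalizedViews` class, the fan IDs, and the `fanview:` naming scheme are all hypothetical, and the actual video delivery would happen in the Wavelength Zone pipeline.

```python
from typing import Dict, Iterable, Set


class PersonalizedViews:
    """Tracks which camera angle each in-stadium fan has chosen,
    falling back to the main program feed when no choice is made."""

    def __init__(self, available_angles: Iterable[str], program_feed: str) -> None:
        self.available: Set[str] = set(available_angles)
        self.program_feed = program_feed
        self.choices: Dict[str, str] = {}  # fan_id -> chosen camera

    def select(self, fan_id: str, angle: str) -> None:
        """Record a fan's chosen angle; unknown angles are rejected."""
        if angle not in self.available:
            raise KeyError(f"unknown angle: {angle}")
        self.choices[fan_id] = angle

    def feed_for(self, fan_id: str) -> str:
        """The feed to stream to this fan's phone."""
        return self.choices.get(fan_id, self.program_feed)

    def register_fan_view(self, fan_id: str) -> str:
        """Expose a fan's phone camera as a new source that a switcher could cut to."""
        source = f"fanview:{fan_id}"
        self.available.add(source)
        return source


views = PersonalizedViews(["wide", "goal_cam"], program_feed="program")
views.select("fan-17", "goal_cam")
fan_source = views.register_fan_view("fan-42")
```

Because a registered fan view joins the same pool of sources, it can be offered to other fans or cut into the main broadcast like any other camera.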

Summary

In this chapter, we covered a number of 5G-based MEC solutions, delving into the capabilities and potential of AWS Wavelength for public edge solutions, and AWS Private 5G for private implementations. Our exploration of the observability, security, and capacity challenges faced by Wi-Fi networks, as compared to 5G, painted a picture of the strengths and limitations of each technology.

We discussed the capabilities of CV and robotics, empowered by 5G’s speed and low latency. The portion covering V2X communication showcased the importance of seamless and rapid communication in enhancing vehicular safety and functionality. The section on software-defined video production shed light on how 5G is reshaping content creation, providing faster, more efficient ways to produce and distribute video.

In the next chapter, we’ll dive into how to address the requirements of immersive experiences (AR/VR) using AWS services.